There is a plethora of data analytics courses that big data enthusiasts are picking up these days. That is no surprise, given the promise these courses hold and how far big data technologies have penetrated industries across the world.

Let’s take a look at the most popular techniques such courses cover:

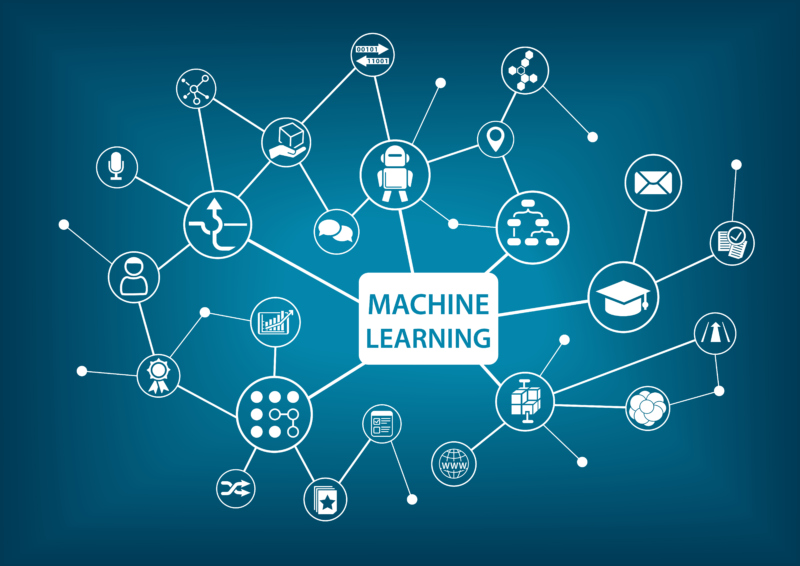

- Predictive analysis: It includes software and/or hardware solutions that let organizations discover, evaluate, optimize, and deploy predictive models. Such analysis is run on big data sources to predict unknown future events, using techniques such as data mining, modeling, statistics, machine learning, and artificial intelligence.

- NoSQL databases: These provide a mechanism to store and retrieve data without the fixed tabular schema of relational databases. The database technologies the term encompasses were developed in response to the demands of building modern applications. Compared with relational databases, NoSQL databases typically scale out more easily and can perform better for certain workloads.

- Stream analytics: With stream analytics, one can rapidly develop and deploy inexpensive solutions to obtain real-time insights from sensors, devices, infrastructure and applications. It is generally used for Internet of Things (IoT) scenarios; for example, doing real-time remote management and monitoring or getting insights from devices like mobile phones, connected cars, and even smart coffee machines.

- In-memory data fabric: It allows you to do real-time streaming, high-performance transactions, and fast analytics in a single processing layer that enables comprehensive data access as well.

It can easily power existing as well as new applications in a distributed, massively parallel architecture on low-cost, industry-standard hardware. It provides a data environment that is safe, highly available, and manageable. It also lets organizations process full ACID transactions and extract insights from real-time, interactive, and batch queries.
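To make the stream analytics idea from the list above a little more concrete, here is a minimal Python sketch that computes a rolling average over readings as they arrive. The function name `rolling_average`, the window size, and the sample sensor values are all assumptions made for illustration; a production system would typically rely on a dedicated streaming platform rather than a plain generator.

```python
from collections import deque


def rolling_average(readings, window=3):
    """Yield the mean of the most recent `window` readings as each one arrives."""
    recent = deque(maxlen=window)  # old readings fall off automatically
    for value in readings:
        recent.append(value)
        yield sum(recent) / len(recent)


# Hypothetical temperature readings from a single sensor.
sensor_feed = [20.0, 22.0, 24.0, 30.0]
averages = list(rolling_average(sensor_feed))
```

Because the generator keeps only the last few readings in memory, the same pattern works on an unbounded feed, which is the essential difference between stream processing and batch processing.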

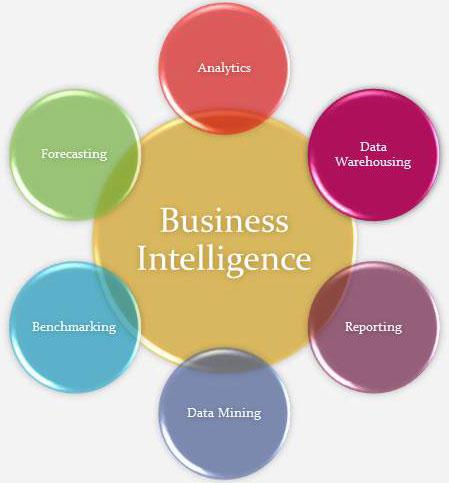

- Data virtualization: It is an agile approach to data integration that companies use to gather more insight from big data. It adapts to ever-changing analytics and business intelligence requirements. The streamlined approach of data virtualization reduces complexity and is said to cut costs by as much as 50-75%.

- Data integration: As the name suggests, it means combining data from various sources, stored using various technologies, to give a unified view of that data. When companies merge or consolidate, such technology is especially valuable for viewing their combined data assets. A data warehouse is one common result of such an initiative.

- Data preparation: Also known as data pre-processing, it involves manipulating data to make it suitable for further processing and analysis. It cannot be fully automated because it comprises several tasks of varied nature. Successful data mining is not possible without data preparation.
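Data integration and data preparation usually go hand in hand, so here is a small Python sketch that shows both on toy data. Everything in it is hypothetical: the field names (`id`, `email`, `spend`), the helper functions `prepare` and `integrate`, and the two sample sources standing in for the records of two merging companies. It is meant only to illustrate the idea, not to describe any particular tool.

```python
def prepare(record):
    """Normalize one raw record: coerce the id, tidy the email, default missing spend to 0."""
    return {
        "id": int(record["id"]),
        "email": record["email"].strip().lower(),
        "spend": float(record.get("spend") or 0.0),
    }


def integrate(*sources):
    """Merge records from several sources into one view keyed by id, summing spend."""
    unified = {}
    for source in sources:
        for raw in source:
            rec = prepare(raw)
            if rec["id"] in unified:
                unified[rec["id"]]["spend"] += rec["spend"]
            else:
                unified[rec["id"]] = rec
    return unified


# Hypothetical customer records held by two companies that are merging.
company_a = [{"id": "1", "email": " Ann@Example.com ", "spend": "120.5"}]
company_b = [
    {"id": 1, "email": "ann@example.com", "spend": None},
    {"id": 2, "email": "BOB@example.com", "spend": "40"},
]
combined = integrate(company_a, company_b)
```

The preparation step runs before the merge so that inconsistent types and formatting (a string id in one source, an integer in the other) do not cause the same customer to appear twice in the unified view.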

A proficient data analyst will have mastered at least some of these techniques in order to be of value to organizations that employ big data technologies. If the course you are pursuing does not cover all of them, perhaps it is time to enroll in an add-on course to get that competitive edge.

Is there something missing from this list? Share it in the comments section.